With the advent of Big Data and analytics, the world has changed in ways previously unimaginable. In a rapidly growing and thriving industry such as the motion picture industry, data analytics has opened a number of important new avenues that can be used to analyze past data, make creative marketing decisions, and accurately predict the fortunes of impending movie releases.

The timing of a release is critical to a movie's success. To facilitate release date selection, studios decide and pre-announce targeted release dates on a weekly basis, long before their forthcoming movies actually open. Their choices of release dates, and any subsequent changes, are strategic in nature: they take into account factors such as regional holidays, cultural events, the political situation and sports events. Predictive analytics on historical movie release data and box office performance can help identify the release date most likely to maximize a movie's performance at the box office.

Consider a scenario where a movie has already been slated for release on a particular date. Suddenly, a competitor movie is announced, and the production house must decide whether to go ahead with the release as planned or change the date.

Business Challenge:

Determining the optimal release date for a movie: leveraging analytics to improve the movie's chances of box office success.

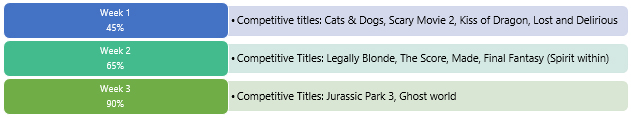

‘Cats & Dogs’ and ‘America’s Sweethearts’ were both scheduled for release on July 4, 2001. To avoid competition, ‘America’s Sweethearts’ was pushed back by about a week to July 13, 2001, but soon a new entrant, ‘Legally Blonde’, was announced for release on July 13, 2001 as well. With a number of new players in the calendar, what can be done to optimize the release date for box office success?

Approach

Social media analytics can be used to predict the optimal release date for a movie. Using data collected from social media channels, we can gauge the expectations of the target audience and the buzz around the movie.

Collection of Data

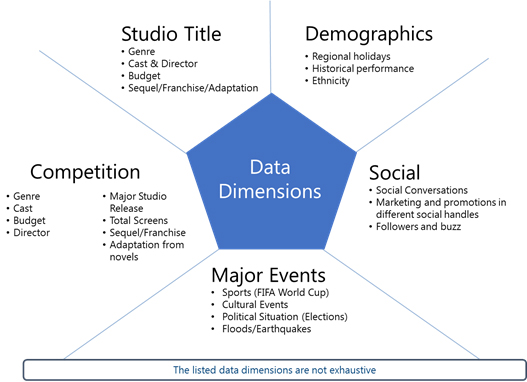

Many macroeconomic and microeconomic factors affect the release date of a movie. Some of the factors that can be measured and included in the analysis are shown in the accompanying diagram. Most of the data can be collected from public sources such as IMDb and Rovi.

Studio Title

The database will contain historical data of all the movies at a studio and genre level including cast, support and box office performance.

Competition

Data of competitor movies (those getting released in the same week) needs to be analyzed carefully since other movie releases in the same genre will impact the performance of the movie at the box office.
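As a rough illustration of the same-week check described here, the sketch below groups a release calendar by ISO week and lists the rivals a title would face. The release dates follow the example in this article; the genre labels, the `RELEASES` structure and the `same_week_rivals` helper are assumptions made for illustration, not part of any real release-calendar product.

```python
from datetime import date

# Hypothetical release calendar: (title, genre, release date).
# Dates follow the article's example; genre labels are illustrative assumptions.
RELEASES = [
    ("Cats & Dogs", "family", date(2001, 7, 4)),
    ("America's Sweethearts", "romance", date(2001, 7, 13)),
    ("Legally Blonde", "comedy", date(2001, 7, 13)),
]


def iso_week(day):
    """Return the (ISO year, ISO week number) pair for a date."""
    return tuple(day.isocalendar())[:2]


def same_week_rivals(title, releases, same_genre_only=False):
    """List other titles opening in the same ISO week as `title`.

    With same_genre_only=True, only rivals sharing the title's genre are kept.
    """
    target = next((r for r in releases if r[0] == title), None)
    if target is None:
        return []
    _, genre, day = target
    rivals = []
    for other_title, other_genre, other_day in releases:
        if other_title == title:
            continue
        if iso_week(other_day) != iso_week(day):
            continue
        if same_genre_only and other_genre != genre:
            continue
        rivals.append(other_title)
    return rivals


print(same_week_rivals("America's Sweethearts", RELEASES))
```

Grouping by ISO week is only one possible definition of "the same week"; a studio might instead compare opening weekends or a rolling window of days.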

Social Media Analysis

This process involves analyzing social media conversations along with the themes, sentiment and demographic features. This extracted data will help promote creatives that have the potential to create maximum impact and will also help identify the right target audience.

Major Events

Cultural events, sports events such as the FIFA World Cup, and political events such as elections and protests also play an important role when it comes to the release of a movie. It may not be ideal to release a movie during such events, as theatre occupancy rates are generally lower.
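One simple way to flag such clashes is to test whether a candidate opening window overlaps any known event window. The sketch below is a minimal example under stated assumptions: the `EVENTS` entries are placeholders rather than real event dates, and the three-day opening window is an assumed stand-in for an opening weekend.

```python
from datetime import date, timedelta

# Hypothetical event calendar: (name, first day, last day).
# These windows are placeholders, not real event dates.
EVENTS = [
    ("Example sports final", date(2001, 7, 6), date(2001, 7, 8)),
    ("Example election week", date(2001, 7, 23), date(2001, 7, 29)),
]


def clashing_events(release_day, events, run_days=3):
    """Return the names of events that overlap the opening window.

    The opening window is `run_days` consecutive days starting on the
    release day (an assumption made for this sketch).
    """
    window_start = release_day
    window_end = release_day + timedelta(days=run_days - 1)
    return [
        name
        for name, start, end in events
        # Two date ranges overlap when each starts before the other ends.
        if start <= window_end and end >= window_start
    ]


print(clashing_events(date(2001, 7, 4), EVENTS))
print(clashing_events(date(2001, 7, 13), EVENTS))
```

A fuller analysis would probably weight each event by its expected effect on occupancy rather than treating every overlap equally.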

Analysis:

Illustrative Scorecard

With all factors considered, the ideal release date for ‘America’s Sweethearts’ was chosen so as to give the movie a higher probability of success.

A database with the release calendar provided information on competitors' releases, genre, budget and so on. It is important to create a set of rules that satisfy the success criteria; these rules can be generated from historical data using CART (decision tree) rule generation.
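To make the rule-generation idea concrete, the sketch below implements the single split-selection step that CART repeats recursively: it picks the feature and threshold that minimize weighted Gini impurity. It is not the model used in this analysis. The feature names, the six historical rows and the success labels are all made up for illustration, and a production system would more likely rely on an established decision tree library.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)


def best_split(rows, labels, feature_names):
    """Find the threshold split with the lowest weighted Gini impurity.

    Returns (impurity, feature name, threshold), or None if no split exists.
    """
    best = None
    n = len(rows)
    for j, name in enumerate(feature_names):
        values = sorted({row[j] for row in rows})
        # Candidate thresholds: midpoints between consecutive distinct values.
        for lo, hi in zip(values, values[1:]):
            threshold = (lo + hi) / 2
            left = [y for row, y in zip(rows, labels) if row[j] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[j] > threshold]
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[0]:
                best = (score, name, threshold)
    return best


# Hypothetical history: (same-week competitors, budget in $M) per past release.
FEATURES = ["same_week_competitors", "budget_musd"]
ROWS = [(0, 40), (0, 90), (1, 60), (1, 30), (2, 80), (3, 50)]
SUCCESS = [1, 1, 1, 1, 0, 0]  # 1 = met the (assumed) success criterion

score, name, threshold = best_split(ROWS, SUCCESS, FEATURES)
print(f"IF {name} <= {threshold} THEN success is more likely (impurity {score:.2f})")
```

On this toy data the split falls on the number of same-week competitors, which reads directly as a rule; real data would rarely separate this cleanly and would need a deeper tree plus validation on held-out releases.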

Inferences:

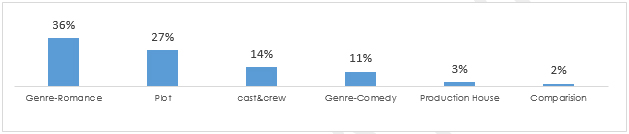

- The data was collected from social media by using keywords relevant to the movie; thereafter, using text mining, top themes were extracted from the tweet data. Since the movie revolved around a love story, the most popular theme for the movie was the genre, which in this case was romance. The other significant genre which created a buzz in social media was comedy. Using this analysis, the most apt genre for the movie was decided, which was then used to market the movie.

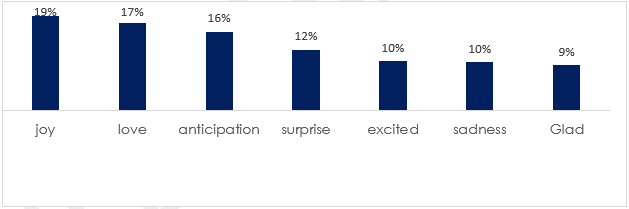

- Using the same social media data, sentiment analysis was carried out. This gave the production house an idea of the public sentiment about the movie. The analysis showed ‘Joy’ as the top sentiment, followed by ‘Love’.

- We also looked at the excitement about the movie release across segments such as age, gender and location. This helped us identify the age group, gender and location where the movie gained maximum publicity, which in turn helped with the marketing.

For example, women in the age group of 21 to 24 dominated the social conversations, while men in the age group of 26 to 32 had higher social engagement.
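The kind of tallying behind these inferences can be sketched in a few lines. The example below counts emotion labels with a tiny keyword lexicon and counts posts per gender and age band. Everything in it is an assumption for illustration: the lexicon, the sample posts and their demographic fields are invented, and real sentiment analysis would use a curated lexicon or a trained model rather than exact keyword matches.

```python
import re
from collections import Counter

# Hypothetical emotion lexicon; a real project would use a curated resource.
EMOTION_LEXICON = {
    "happy": "joy", "fun": "joy", "excited": "joy",
    "love": "love", "adore": "love",
    "boring": "disappointment",
}

# Hypothetical posts with made-up demographic fields.
POSTS = [
    {"text": "So excited for this movie, looks fun", "gender": "F", "age": 22},
    {"text": "I love the cast and adore the story", "gender": "F", "age": 23},
    {"text": "Trailer made me happy", "gender": "M", "age": 28},
    {"text": "Looks boring to me", "gender": "M", "age": 31},
]


def emotion_counts(posts, lexicon):
    """Count emotion labels by matching lowercase word tokens against the lexicon."""
    counts = Counter()
    for post in posts:
        for token in re.findall(r"[a-z']+", post["text"].lower()):
            if token in lexicon:
                counts[lexicon[token]] += 1
    return counts


def posts_by_segment(posts, age_bands):
    """Count posts per (gender, age band); bands are inclusive (low, high) pairs."""
    counts = Counter()
    for post in posts:
        for low, high in age_bands:
            if low <= post["age"] <= high:
                counts[(post["gender"], f"{low}-{high}")] += 1
                break
    return counts


print(emotion_counts(POSTS, EMOTION_LEXICON).most_common(2))
print(posts_by_segment(POSTS, [(21, 24), (26, 32)]))
```

Keyword matching ignores negation and sarcasm, so counts like these are best treated as a first pass before a more careful model is applied.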

Business Impact:

The inferences from social media data helped to select the final release date for the movie. The analysis indicated that, instead of releasing the movie in the second week, it would have been better to release it in the third week, where it had a 25% higher chance of success.

While winning an Oscar might be the ultimate taste of success, winning at the box office is just as sweet a measure of it. Though we cannot promise an Oscar, with analytics we can improve the odds of the latter!

Note: The engagement numbers and impressions mentioned are for representative purposes only.

*With inputs from Sathish Prabahar